Unity is joining the rest of the gang in providing generative AI tools for its users, but has been careful (unlike some) to ensure those tools are built on a solidly non-theft-based foundation. Muse, the new suite of AI-powered tools, will start with texture and sprite generation, and graduate to animation and coding as it matures.

The company announced these features alongside a cloud-based platform and the next big version of its engine, Unity 6, at its Unite conference in San Francisco. After a tumultuous couple of months, in which a major product plan was totally reversed and the CEO was ousted, the company is probably eager to get back to business as usual, if that’s even possible.

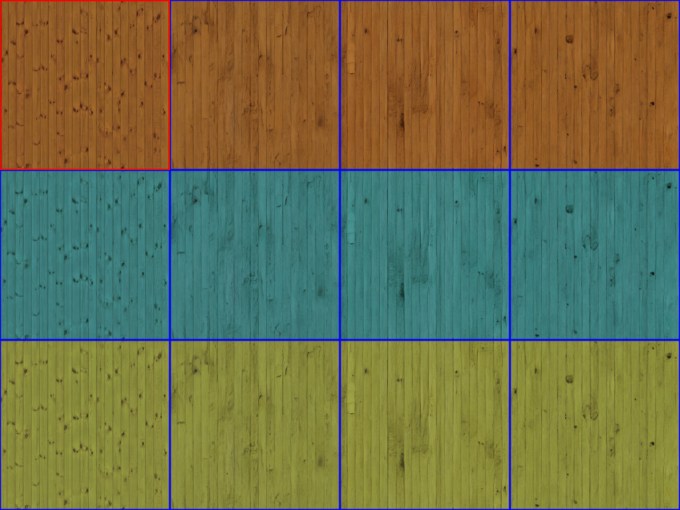

Unity has previously positioned itself as the champion of smaller developers who lack the resources to employ a more wide-ranging development platform like rival Unreal. As such, AI tools could be a helpful addition for devs who can’t, for instance, afford to spend days making 32 slightly varying wood wall textures in high definition.

Although plenty of tools exist to help generate or mutate such assets, being able to say “make more like this” without leaving your main development environment is frequently preferable. The simpler the workflow, the more one can do without worrying about details like formatting and siloed resources.

AI assets are often used in prototyping as well, where things like artifacts and slightly janky quality — generally present no matter the model these days — aren’t of any real importance. But having your gameplay concept illustrated with original, appropriate art rather than stock sprites or free sample 3D models might make the difference in getting one’s vision across to publishers or investors.

Examples of sprites and textures generated by Unity’s Muse.

Another new AI feature, Sentis, is a little harder to understand. Unity’s press release says it “enables developers to bring complex AI data models into the Unity Runtime to create new gameplay experiences and features.” So it’s a bring-your-own-model kind of thing, with some built-in functionality, and it is currently in open beta.

AI for animation and behaviors is on the way, to be added next year. These highly specialized scripting and design processes could benefit greatly from a generative first draft or multiplicative helper.

Image Credits: Unity

A big part of this release, Unity’s team emphasized, was making sure these tools didn’t live in the shadow of IP infringement cases to come. As fun as image generators like Stable Diffusion are to play with, they’re built on the work of artists who never consented to have it ingested and regurgitated.

“In order to provide useful outputs that are safe, responsible, and respectful of other creators’ copyright, we challenged ourselves to innovate in our training techniques for the AI models that power Muse’s sprite and texture generation,” reads a blog post on responsible AI techniques accompanying the announcement.

The company said that it used a completely custom model trained on Unity-owned or licensed imagery. It did, however, use Stable Diffusion to, essentially, generate a larger synthetic dataset from the smaller curated one it had assembled.

Image Credits: Unity

For example, this wood wall texture might be rendered in several variations and color types using the Stable Diffusion model, but no new content is added, or at least that is how the company described it. As a result, the new dataset is not only based on responsibly sourced data but is also one step removed from it, reducing the likelihood that a particular artist or style is replicated.

This approach is safer, but Unity admitted that it lowered the quality of the initial models it is making available. As noted above, however, the actual quality of generated assets is not always of high importance.

Unity Muse will cost $30 per month as a standalone offering. We’ll soon hear from the community, no doubt, on whether the product justifies its price.
